Recently, while working with a client, I ran into a requirement for global navigation in their new SharePoint 2010 farm. They have approximately 10 web applications with a minimum of 10 site collections per web application, so manually maintaining the navigation in each site collection was out of the question. I therefore started looking at the usual suspects for global navigation and went over the SiteMapDataSource, the SharePoint 2010 Navigation Menu, and several custom solutions with them. Each had its benefits and drawbacks. We had just about settled on a robust custom solution when an idea came to me while watching a St. Louis Cardinals baseball game (not important to the solution): what about the Managed Metadata Service? We were only going to have one instance of the service for the whole farm. As long as the farm is up, so is the service (i.e., it does not depend on any particular web application). So I started looking…

Each Term has a label that could be used as the display name and a large Description text box that could hold the URL. Term Sets also have built-in custom sorting and could support multiple languages in the future if needed. Once I had determined that, it was just a matter of some custom code and proof-of-concept (POC) testing.

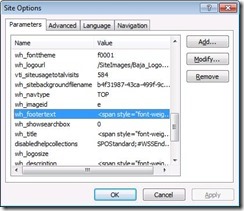

I started by setting up a Group, Term Set and the nested Terms that would make up my navigation. Assigning each Term a “Default Label”, populating the “Description” if I want it to be a link or leaving it blank if it was just a container and unchecking “Available for Tagging” for Terms but keeping it checked for the Term Set (more on that later):

Next, enter Visual Studio.

The Microsoft.SharePoint.Taxonomy namespace / assembly has the classes we need to pull our Managed Metadata and is fairly easy to use. We start by getting the Terms we are looking for from the service:

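```csharp
// Namespaces needed at the top of the code file
using System;
using System.Collections.Generic;
using System.Linq;
using Microsoft.SharePoint;
using Microsoft.SharePoint.Taxonomy;

// Connect to the Managed Metadata Service used by the current site collection
TaxonomySession session = new TaxonomySession(SPContext.Current.Site);
TermStore termStore = session.DefaultSiteCollectionTermStore;

// Look up the navigation Term Set by name (LCID 1033 = English)
TermSetCollection tsc = termStore.GetTermSets("GlobalNavigation", 1033);

if (tsc.Count > 0)
{
    TermSet tsGlobal = tsc[0];
    TermCollection tc = tsGlobal.GetAllTerms();

    // The Term Set's CustomSortOrder lists its top-level Term GUIDs, colon-separated
    List<string> rootOrder = string.IsNullOrEmpty(tsGlobal.CustomSortOrder)
        ? new List<string>()
        : tsGlobal.CustomSortOrder.Split(':').ToList();

    var items = _buildMenu(tc, Guid.Empty, rootOrder);
    _renderMenu(items);
}
```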

Notice that I did have to hardcode the name of my Term Set, “GlobalNavigation”, but everything else is fairly generic. I am assuming there is only one Term Set named GlobalNavigation in the entire service. You could also narrow the search by Group first to make sure.

Also notice the unusual third argument to my _buildMenu function. When custom sorting is used, the Terms are not returned in sorted order, and a Term has no property holding its own position. Instead, the parent container (the TermSet in this case) has a property called CustomSortOrder. It is a string of GUIDs separated by colons. I therefore split that string into a list, already in the correct order, for the function to use.
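The CustomSortOrder handling described above can be pulled into a small helper. The following is a minimal standalone sketch with no SharePoint types. `SortOrderHelper`, `Parse` and `PositionOf` are hypothetical names introduced for illustration. Lowercasing both sides is a defensive assumption so that the GUID comparison does not depend on letter case.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: turns a CustomSortOrder-style string of colon-separated
// GUIDs into an ordered list and finds a term's position in it.
public static class SortOrderHelper
{
    // Returns an empty list for null/empty input so callers never need a null check.
    public static List<string> Parse(string customSortOrder)
    {
        if (string.IsNullOrEmpty(customSortOrder))
            return new List<string>();

        return customSortOrder
            .Split(new[] { ':' }, StringSplitOptions.RemoveEmptyEntries)
            .Select(s => s.Trim().ToLowerInvariant())   // assumption: normalize case
            .ToList();
    }

    // Index of the term in the custom order, or -1 when it is not listed
    // (the same value List<string>.IndexOf returns in _buildMenu).
    public static int PositionOf(List<string> sortOrder, Guid termId)
    {
        return sortOrder.IndexOf(termId.ToString().ToLowerInvariant());
    }
}
```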

To build the structure of my navigation I created a private class to maintain the information:

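```csharp
// Pairs a Term with its child menu items and its position in the parent's custom sort order
private class _menu
{
    public Term item { get; set; }
    public List<_menu> children { get; set; }
    public int order { get; set; }

    public _menu(Term i)
    {
        item = i;
        children = new List<_menu>();
        order = 0;
    }
}
```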

Then it was just a matter of parsing the terms and putting them in the right order:

```csharp
private List<_menu> _buildMenu(TermCollection terms, Guid parent, List<string> sortOrder)
{
    var ret = new List<_menu>();

    // Direct, non-deprecated children of 'parent' (top-level Terms when parent is Guid.Empty).
    // No orderby here: the final sort below decides the order.
    IEnumerable<Term> childTerms;
    if (parent != Guid.Empty)
    {
        childTerms = from k in terms
                     where k.Parent != null && k.Parent.Id == parent && !k.IsDeprecated
                     select k;
    }
    else
    {
        childTerms = from k in terms
                     where k.Parent == null && !k.IsDeprecated
                     select k;
    }

    foreach (Term child in childTerms)
    {
        var newItem = new _menu(child);

        // Position in the parent's custom sort order (-1 if the Term is not listed)
        if (sortOrder != null && sortOrder.Count > 0)
            newItem.order = sortOrder.IndexOf(child.Id.ToString());

        // Find this item's sub-terms - Recursion Rocks!
        newItem.children = _buildMenu(terms, child.Id,
            string.IsNullOrEmpty(child.CustomSortOrder)
                ? new List<string>()
                : child.CustomSortOrder.Split(':').ToList());

        ret.Add(newItem);
    }

    // Custom sort order first, then alphabetical by name as a tie-breaker
    return (from r in ret
            orderby r.order, r.item.Name
            select r).ToList();
}
```
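You can check the ordering logic of _buildMenu without a SharePoint farm by using a standalone model of the same recursion. This is a sketch under stated assumptions. `FakeTerm`, `MenuNode` and `MenuBuilder` are hypothetical stand-ins, not SharePoint classes. `FakeTerm` stores a `ParentId` (with `Guid.Empty` for top-level terms) in place of the real `Term.Parent` reference.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for a Term, holding only what _buildMenu reads.
public class FakeTerm
{
    public Guid Id;
    public Guid ParentId;          // Guid.Empty for top-level terms
    public string Name;
    public string CustomSortOrder; // colon-separated child GUIDs, or null
    public bool IsDeprecated;
}

public class MenuNode
{
    public FakeTerm Item;
    public int Order;
    public List<MenuNode> Children = new List<MenuNode>();
}

public static class MenuBuilder
{
    // Mirrors _buildMenu: take the direct, non-deprecated children of 'parent',
    // rank them by index in the parent's sort order (-1 if absent), then by name.
    // sortOrder must not be null (pass an empty list instead).
    public static List<MenuNode> Build(IList<FakeTerm> terms, Guid parent, List<string> sortOrder)
    {
        var result = new List<MenuNode>();
        foreach (var child in terms.Where(t => t.ParentId == parent && !t.IsDeprecated))
        {
            var node = new MenuNode { Item = child };
            node.Order = sortOrder.IndexOf(child.Id.ToString());
            node.Children = Build(terms, child.Id, Split(child.CustomSortOrder));
            result.Add(node);
        }
        return result.OrderBy(n => n.Order).ThenBy(n => n.Item.Name).ToList();
    }

    // Splits a colon-separated GUID string; null or empty yields an empty list.
    static List<string> Split(string s)
    {
        return string.IsNullOrEmpty(s)
            ? new List<string>()
            : s.Split(new[] { ':' }, StringSplitOptions.RemoveEmptyEntries).ToList();
    }
}
```

With a root sort order of Home then About, Home comes first even though About sorts earlier alphabetically. Deprecated children are skipped.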

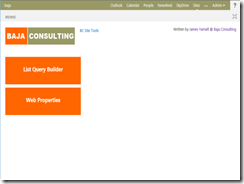

Once you get the List of _menu items you just have to use your favorite menu rendering technique, apply a little CSS, add your new control to your master page solution (or hack with SPD) and you have a menu based on your Term Set:
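_renderMenu is not shown here. The following is one possible rendering approach, as a minimal sketch: emit nested `<ul>`/`<li>` markup that CSS can style as a drop-down. It assumes the _menu tree has already been flattened into a hypothetical `NavItem` type. `Label` takes the Term's default label, and `Url` takes its Description. A blank Url marks a container, which is rendered as a plain `<span>` instead of a link. `NavItem` and `MenuRenderer` are illustrative names only.

```csharp
using System.Collections.Generic;
using System.Net;
using System.Text;

// Hypothetical flattened node: Label from the Term's default label,
// Url from its Description (empty means "container, no link").
public class NavItem
{
    public string Label;
    public string Url;
    public List<NavItem> Children = new List<NavItem>();
}

public static class MenuRenderer
{
    // Emits nested <ul>/<li> markup; CSS can turn it into a drop-down menu.
    public static string Render(List<NavItem> items)
    {
        var sb = new StringBuilder();
        RenderList(items, sb);
        return sb.ToString();
    }

    static void RenderList(List<NavItem> items, StringBuilder sb)
    {
        if (items.Count == 0) return;   // no empty <ul> for leaf items
        sb.Append("<ul>");
        foreach (var item in items)
        {
            sb.Append("<li>");
            string label = WebUtility.HtmlEncode(item.Label);
            if (string.IsNullOrEmpty(item.Url))
                sb.Append("<span>").Append(label).Append("</span>");   // container only
            else
                sb.Append("<a href=\"").Append(WebUtility.HtmlEncode(item.Url)).Append("\">")
                  .Append(label).Append("</a>");
            RenderList(item.Children, sb);   // recurse into sub-terms
            sb.Append("</li>");
        }
        sb.Append("</ul>");
    }
}
```

Labels and URLs are HTML-encoded because contributors can edit them in Term store management.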

Advantages:

- “Term store management” is available to the contributors in each site collection as long as they can get to site settings

- The Term Store is available across the entire farm without being dependent on a web application

- Seems very fast (so far), but add caching to your control just to be sure
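The caching suggestion can take many forms. One framework-neutral option is a time-based wrapper around whatever code loads the menu. The following is a minimal sketch, not tied to SharePoint or ASP.NET. `TimedCache` is a hypothetical class, and in a real control the ASP.NET cache could serve the same purpose. The loader runs at most once per lifetime window, and an injectable clock keeps it testable.

```csharp
using System;

// Hypothetical helper: caches the result of an expensive loader
// (e.g. building the menu from the Term Store) for a fixed lifetime.
public class TimedCache<T>
{
    private readonly Func<T> _loader;
    private readonly TimeSpan _lifetime;
    private readonly Func<DateTime> _clock;
    private readonly object _lock = new object();
    private T _value;
    private DateTime _expires = DateTime.MinValue;   // forces a load on first Get()

    public TimedCache(Func<T> loader, TimeSpan lifetime, Func<DateTime> clock = null)
    {
        _loader = loader;
        _lifetime = lifetime;
        _clock = clock ?? (() => DateTime.UtcNow);
    }

    // Returns the cached value, reloading it once the lifetime has elapsed.
    // The lock keeps concurrent requests from loading at the same time.
    public T Get()
    {
        lock (_lock)
        {
            var now = _clock();
            if (now >= _expires)
            {
                _value = _loader();
                _expires = now + _lifetime;
            }
            return _value;
        }
    }
}
```

A short lifetime, such as a few minutes, keeps Term Store edits showing up reasonably quickly while sparing most requests a round trip to the service.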

Quirks / Disadvantages:

- You must make the Term Set “Available for Tagging” so everyone can see it. This might confuse people who use Terms for ordinary tagging and come across our Term Set. However, since we unchecked the tagging option for each Term, they really can’t do anything with it.

- To get the custom sorting to work properly, every time you add a new Term you must change the sort order, save it and then change it back to get the CustomSortOrder to populate correctly.

- This does not security-trim the links, but trimming could be added.

- Requires custom code
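The sort-order quirk above has a side effect in _buildMenu. A newly added Term that is not yet listed in its parent's CustomSortOrder gets an index of -1, so it jumps to the top of its level until the order is re-saved. One possible alternative is a sort key that maps "not listed" to `int.MaxValue`, so unlisted Terms fall to the end instead, sorted by name. This does not remove the need to re-save the order. It only changes where unlisted Terms appear in the meantime. The following is a standalone sketch, and `FallbackOrdering` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class FallbackOrdering
{
    // Sort key: the term's index in the parent's custom sort order, or
    // int.MaxValue when it is missing (e.g. added after the order was saved),
    // so unlisted terms land after all listed ones.
    public static int SortKey(List<string> sortOrder, Guid termId)
    {
        int index = sortOrder.IndexOf(termId.ToString());
        return index >= 0 ? index : int.MaxValue;
    }

    // Orders (id, name) pairs by sort key, breaking ties by name as _buildMenu does.
    public static List<string> OrderNames(List<string> sortOrder,
                                          IEnumerable<KeyValuePair<Guid, string>> terms)
    {
        return terms
            .OrderBy(t => SortKey(sortOrder, t.Key))
            .ThenBy(t => t.Value)
            .Select(t => t.Value)
            .ToList();
    }
}
```

In _buildMenu, `newItem.order` would be set from a key like this instead of `IndexOf` directly.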

Summary:

While I just proved this out this week and have not implemented it in production yet, I have tested it on multiple web applications and site collections, including anonymous access, and so far so good. The client flipped over the maintainability and “foolproof” nature of this solution, but I am still searching for holes. If you have any questions or comments, especially about any inherent flaw, please let me know.

As always, I am a consultant available to help implement or customize the full solution. However, if enough people find it useful, I would be happy to create a CodePlex solution. This information is for discussion and education purposes only and is in no way guaranteed.

Cheers,

James